The Shocking Energy Bill of Training a Giant AI Model

How much energy does it take to train a huge AI model?

I did some calculations, and the results are surprising.

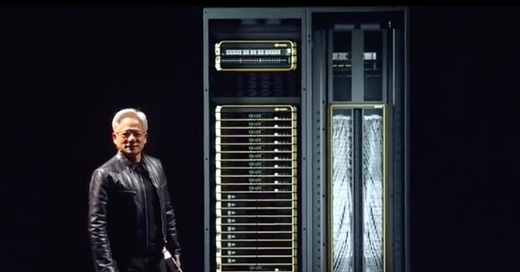

The image above shows a single GPU.

Let’s look at a LLaMA 70B-scale model and assume a training run that needs:

Training Time: About 21 days

Number of GPUs: 512 NVIDIA A100 GPUs

Here’s the breakdown:

Power Consumption per GPU: 0.3 kW (assumed average draw per A100)

Total Power Consumption: 0.3 kW × 512 GPUs = 153.6 kW

Training Time in Hours: 21 days × 24 hours/day = 504 hours

Energy Consumption in kWh: 153.6 kW × 504 hours = 77,414.4 kWh

Including Data Center Overhead (20% for cooling): 77,414.4 kWh × 1.2 = 92,897.28 kWh

Equivalent Household Usage: The average U.S. household uses 877 kWh per month

Number of Households Powered: 92,897.28 kWh / 877 kWh/month ≈ 106 households

To put that in perspective, the energy used to train this one model could power about 106 homes for a whole month!

Summary:

Training a 70-billion-parameter model on 512 GPUs for 21 days consumes about 92,897 kWh of energy, including cooling overhead.

This is enough to power around 106 average U.S. households for one month.
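As a cross-check, here is a minimal Python sketch that recomputes the estimate step by step. The constant and function names are hypothetical, and the inputs (0.3 kW per GPU, 512 GPUs, 21 days, a 1.2× overhead factor, 877 kWh per household-month) are the post's assumptions rather than measured values.

```python
# Minimal sketch: recompute the training-energy estimate from the post's
# assumed inputs. These are illustrative assumptions, not measurements.

GPU_POWER_KW = 0.3             # assumed average draw per A100 GPU
NUM_GPUS = 512                 # assumed cluster size
TRAINING_DAYS = 21             # assumed wall-clock training time
OVERHEAD_FACTOR = 1.2          # +20% data-center overhead (cooling, etc.)
HOUSEHOLD_KWH_PER_MONTH = 877  # average U.S. household usage cited in the post


def training_energy_kwh(gpu_kw, num_gpus, days, overhead):
    """Return (GPU-only energy, energy including overhead), both in kWh."""
    total_kw = gpu_kw * num_gpus   # total cluster power draw in kW
    hours = days * 24              # training time in hours
    gpu_energy = total_kw * hours  # kW × h = kWh
    return gpu_energy, gpu_energy * overhead


gpu_kwh, total_kwh = training_energy_kwh(
    GPU_POWER_KW, NUM_GPUS, TRAINING_DAYS, OVERHEAD_FACTOR
)
household_months = total_kwh / HOUSEHOLD_KWH_PER_MONTH

print(f"GPU energy:       {gpu_kwh:,.1f} kWh")
print(f"With overhead:    {total_kwh:,.2f} kWh")
print(f"Household-months: {household_months:.0f}")
```

With these inputs it prints 77,414.4 kWh of GPU energy, 92,897.28 kWh including overhead, and about 106 household-months. Keeping the inputs as named constants makes it easy to try other assumptions, such as a different per-GPU power draw or overhead factor.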

Training large AI models takes a lot of energy.

So, what's the solution?

Well, researchers are exploring ways to make LLMs more energy-efficient.

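One approach is "1-bit LLMs", which store each weight in roughly one bit, so most of the multiplications in a forward pass become simple additions and subtractions that need less power.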
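To make the idea concrete, here is a toy Python illustration, assuming the simplest form of 1-bit quantization: each weight is replaced by its sign (±1) and the whole tensor shares one scale, the mean absolute weight. This is a simplification for illustration, not the exact recipe of any published 1-bit LLM, and the helper names `binarize` and `binary_dot` are hypothetical. The point is that a dot product with ±1 weights needs only additions and subtractions, plus one final multiplication by the scale.

```python
# Toy illustration of 1-bit weights (hypothetical helpers, simplified scheme):
# each weight keeps only its sign, and the tensor shares one scale factor.


def binarize(weights):
    """Quantize weights to ±1 plus a single per-tensor scale (mean |w|)."""
    scale = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return signs, scale


def binary_dot(signs, scale, x):
    """Dot product with ±1 weights: only adds/subtracts inside the loop,
    then a single multiplication by the shared scale at the end."""
    acc = 0.0
    for s, xi in zip(signs, x):
        acc = acc + xi if s == 1 else acc - xi
    return scale * acc


weights = [0.4, -0.2, 0.1, -0.3]
inputs = [1.0, 2.0, 3.0, 4.0]

signs, scale = binarize(weights)
approx = binary_dot(signs, scale, inputs)
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"1-bit approximation: {approx:.2f}, full precision: {exact:.2f}")
```

Here the 1-bit version gives -0.50 against a full-precision -0.90, which shows the trade-off: much cheaper arithmetic in exchange for some lost precision.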

Source: LinkedIn Post